
The rise of biometric identification and verification systems deployed on edge infrastructure has created demand for accurate and efficient facial recognition models. Traditional facial recognition technology based on deep convolutional neural networks (DCNNs) has limitations, including susceptibility to external factors such as occlusions, variations in lighting conditions, and changes in facial expression, all of which can compromise identification accuracy. Engineers therefore need a method that can overcome these challenges.

Engineers need a face attribute recognition technology that uses optimized feature extraction and fusion techniques to improve accuracy further. These techniques extract distinctive features from facial images and combine them into a comprehensive representation of the facial attributes. In addition, the model must improve the flow of information between features at different scales. The paper “Research on Face Attribute Recognition Technology Based on Fine-Grained Features” proposes a solution to these challenges.

The new facial recognition method, from Yizhuo Gao of the Department of Criminal Science and Technology at China’s Jilin Police College, uses bilinear pooling in a DCNN to extract facial features. It sets up networks at three different scales to capture multiscale features. This multiscale approach ensures that features are extracted at various resolutions, capturing both global and local attributes of the face. Features extracted from different convolutional layers within the network are then combined to form a holistic feature set.
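To make the idea of bilinear pooling concrete, the sketch below shows one common formulation: the outer product of two feature vectors is computed at every spatial location, averaged over locations, and then passed through a signed square root and L2 normalization. This is an illustrative assumption about the general technique, not the paper's implementation; the function name `bilinear_pool`, the toy inputs, and the choice of post-processing are all hypothetical.

```python
import math


def bilinear_pool(feats_a, feats_b):
    """Bilinear pooling of two feature maps over shared spatial locations.

    feats_a, feats_b: lists of per-location feature vectors (hypothetical
    stand-ins for the outputs of two convolutional layers).
    Returns a flat descriptor of length len(feats_a[0]) * len(feats_b[0]).
    """
    if not feats_a or len(feats_a) != len(feats_b):
        raise ValueError("feature maps must share the same non-empty locations")
    c_a, c_b = len(feats_a[0]), len(feats_b[0])
    pooled = [0.0] * (c_a * c_b)
    # Accumulate the outer product of the two vectors at every location.
    for vec_a, vec_b in zip(feats_a, feats_b):
        for i, x in enumerate(vec_a):
            for j, y in enumerate(vec_b):
                pooled[i * c_b + j] += x * y
    # Average over locations.
    pooled = [p / len(feats_a) for p in pooled]
    # Signed square root, then L2 normalization (assumed post-processing).
    pooled = [math.copysign(math.sqrt(abs(p)), p) for p in pooled]
    norm = math.sqrt(sum(p * p for p in pooled))
    return [p / norm for p in pooled] if norm > 0 else pooled


# Toy example: two locations, two channels per feature map.
descriptor = bilinear_pool([[1.0, 0.0], [0.0, 2.0]], [[1.0, 1.0], [1.0, 0.0]])
```

In a multiscale setup like the one described above, a descriptor of this kind could be computed at each scale and the results concatenated, although the article does not specify how the paper combines them.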

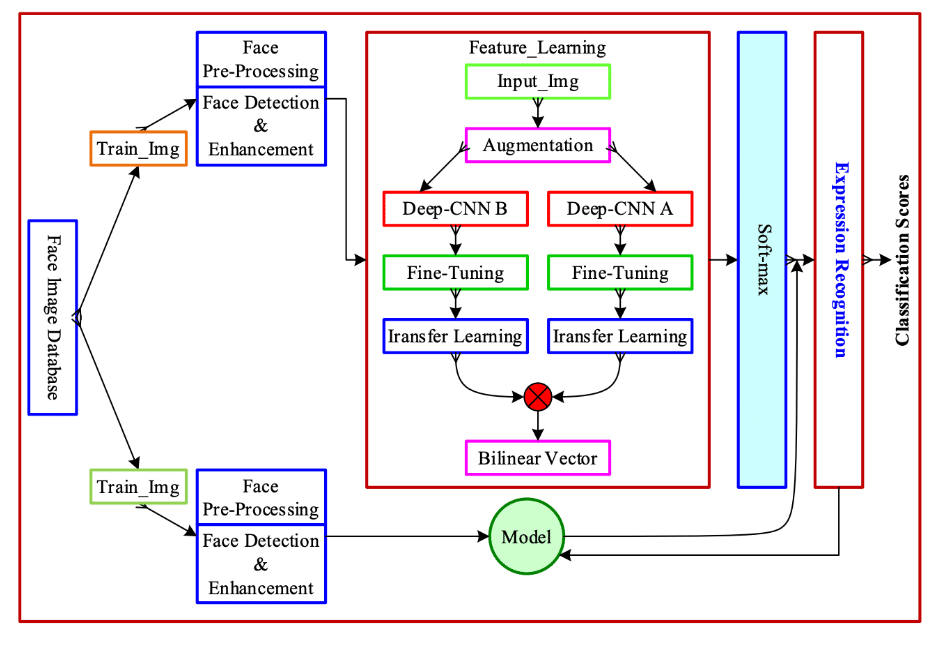

Figure 1. Architecture of facial recognition method using the deep convolutional neural network

Figure 1 shows the architecture process for a facial expression recognition system, which begins with a database of face images intended for training the model. The model has six components.

The initial stage pre-processes the image to prepare the data for feature learning. This can involve detecting the face, improving image quality, or cropping the image so that it includes only the face.

At the feature learning step, the network processes individual images to learn various facial expressions. Augmentation techniques such as rotation, scaling, and color adjustment diversify the training dataset. The images are then passed through two separate convolutional neural networks operating in parallel for feature extraction. The features extracted by these DCNNs, which are often used for transfer learning, are combined to improve the model’s performance.
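The article does not say how the outputs of the two parallel networks are combined. One simple and widely used option is to L2-normalize each branch's feature vector and concatenate them, which the sketch below illustrates. The function names and the toy vectors are hypothetical, and concatenation is an assumption rather than the paper's confirmed fusion method.

```python
def l2_normalize(vec):
    """Scale a vector to unit length; return a copy unchanged if it is all zeros."""
    norm = sum(v * v for v in vec) ** 0.5
    return [v / norm for v in vec] if norm > 0 else list(vec)


def fuse_features(branch_a, branch_b):
    """Fuse two branch outputs by concatenating their normalized vectors."""
    # Normalizing first keeps one branch from dominating purely by magnitude.
    return l2_normalize(branch_a) + l2_normalize(branch_b)


# Toy feature vectors standing in for the outputs of the two parallel DCNNs.
fused = fuse_features([3.0, 4.0], [0.0, 2.0])
```

The fused vector has as many entries as both branches together, so the layer that follows must be sized accordingly.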

Following feature learning, the combined output is fed to a softmax layer, which is responsible for the final prediction in the classification task.

In parallel to the learning, a pre-trained model analyzes the input image from the database to make a prediction. This model prediction is integrated with the output from the softmax layer to enhance prediction accuracy.

The final step is expression recognition, where the facial expression is classified.

The model then outputs a score for each class of facial expression, which, after the softmax layer, can be interpreted as the probability of that expression.
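The conversion from raw class scores to probabilities can be sketched with a numerically stable softmax. The expression labels and score values below are hypothetical and only illustrate how the output would be read.

```python
import math


def softmax(scores):
    """Convert raw class scores into probabilities that sum to 1."""
    # Subtracting the maximum avoids overflow without changing the result.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


labels = ["happy", "sad", "surprised"]  # hypothetical expression classes
scores = [2.0, 1.0, 0.1]                # hypothetical raw network outputs
probs = softmax(scores)
predicted = labels[probs.index(max(probs))]
```

The class with the highest probability is taken as the prediction, and the remaining probabilities indicate how confident the model is relative to the alternatives.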

Experimental Results

The study compared the performance of its facial recognition technique with that of existing algorithms, such as VGG16-SSN, VGG16-PSN, and APS, focusing on attributes such as physical facial features and overall facial structure. The results shown in Figure 2 reveal that the average accuracy rates for VGG16-SSN, VGG16-PSN, APS, and the proposed method are 86.79 percent, 87.13 percent, 91.55 percent, and 97.11 percent, respectively.

Figure 2. Accuracy of face recognition models
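To put the reported averages in perspective, the short calculation below derives the gap between the proposed method and each baseline in percentage points. The accuracy figures are the ones quoted above; the variable names are illustrative.

```python
# Average accuracy rates (percent) as reported in the article.
accuracy = {
    "VGG16-SSN": 86.79,
    "VGG16-PSN": 87.13,
    "APS": 91.55,
    "Proposed": 97.11,
}

# Gain of the proposed method over each baseline, in percentage points.
gains = {
    name: round(accuracy["Proposed"] - value, 2)
    for name, value in accuracy.items()
    if name != "Proposed"
}

for name, gain in gains.items():
    print(f"Proposed vs {name}: +{gain:.2f} percentage points")
```

The smallest gap, against APS, is 5.56 percentage points.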

The paper credits its method’s superior accuracy to its use of global and local features, facilitated by a combination of a shared sub-network and two task-specific sub-networks. This approach not only improves accuracy over the APS algorithm but also addresses the challenges of low-resolution facial recognition.

The research examines how the method performs across a spectrum of resolutions, ranging from 15×15 to 100×100 pixels. Recognizing faces at lower resolutions, such as 15×15 to 30×30, is particularly challenging due to the lack of image detail. Even so, the paper’s algorithm achieved a 54.03 percent accuracy rate at the lowest resolution of 15×15. Figure 3 presents data on the relationship between image resolution and the accuracy rate.

Figure 3. Comparison of accuracy rate for different resolutions

Conclusion

In today’s biometric applications, there is a constant need for power-efficient and highly accurate facial recognition models suitable for deployment on resource-constrained edge devices. Where traditional CNN-based models fell short, according to Gao, the new generation of facial recognition methods excels by leveraging detailed facial attributes for both global and local feature extraction. Implementing these algorithms ensures the desired level of accuracy in challenging environments where external factors may otherwise compromise the performance.

Article Topics

accuracy | biometric identification | biometrics | biometrics at the edge | biometrics research | facial recognition
